Full of doubts, I probably took the reverse of the path I should have taken and started with the implementation. I had the sensor nodes right there in the lab, and at that time we had the operating system (well, sort of) ready, so theoretically I just had to take my algorithms from paper and write them in C using the EOS libraries. However, this path proved harder than expected, not because the algorithms were all wrong, but because of my inexperience with the OS libraries and the immaturity of the whole system (and of myself) at the time. If I recall correctly, I spent most of that Christmas trying to make the darn thing work, but it never really worked properly. Nevertheless, I'm sure that the Eclipse plug-in I developed that fall saved me many headaches in debugging and downloading to the node. But that is another story.

At some point I realized that it was impossible to learn anything from the lines that the nodes were printing to the console through their serial ports. First, it was already difficult to decide which key pieces of information the program should print to show whether the algorithm was working properly. But the most important limitation was that the WISSE protocol was distributed, and analyzing individual nodes' output in terminal windows gave little information about what was actually going on in the network. Because the behavior of the algorithm changed according to when a node was turned on (and hence started broadcasting its beacons), it was very difficult to predict what was supposed to happen and, even worse, almost impossible to find out why one thing or another was happening.

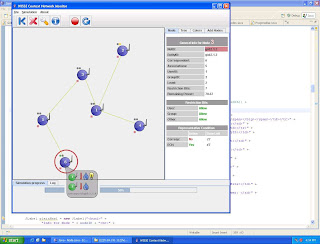

After some time thinking about it, I decided to write some monitoring software. For this purpose, I would attach a node to the monitoring machine with its radio chip set to promiscuous mode, so that it would listen to everything going on and just print it out through the serial port. Fortunately, hacking the Chipcon 2420 was easy, since it is well documented. However, reading line after line in a terminal was again quite confusing, even though it now showed everything that was going on in the network rather than in an isolated node. Soon I decided to code some sort of GUI that would paint each node and the packets they were exchanging (something like NAM in NS2). I used Java because, well, it is the high-level language I know best, and I could use it on my Linux and Windows boxes without recoding. Unfortunately, I'm a person who likes things that look good (even just for my own enjoyment), and not only was I not satisfied with plain circles for nodes, but I also became stubborn about drawing fancy animated messages going from one node to another. This, again, took a lot of time because I was unfamiliar with animation techniques in Java (and, even now, it doesn't work as well as it should).
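To give a rough idea of the first thing such a monitor has to do, here is a minimal Java sketch that turns one line printed by the sniffer node into an object the GUI could draw. Everything specific in it is an assumption made for illustration: the `PKT src=... dst=... type=...` line format, the `SniffedFrame` class, and the use of 65535 as the broadcast address are hypothetical and are not taken from the actual monitor or from the WISSE protocol.

```java
/** One frame overheard by the promiscuous node (hypothetical fields). */
final class SniffedFrame {
    // Assumed broadcast address; the real protocol may use something else.
    static final int BROADCAST = 0xFFFF;

    final int source;
    final int destination;
    final String type;

    SniffedFrame(int source, int destination, String type) {
        this.source = source;
        this.destination = destination;
        this.type = type;
    }

    boolean isBroadcast() {
        return destination == BROADCAST;
    }

    /**
     * Parses a line in the invented format "PKT src=6 dst=65535 type=BEACON".
     * Returns null for anything else (debug chatter, truncated lines),
     * so the caller can simply skip what it does not understand.
     */
    static SniffedFrame parse(String line) {
        if (line == null) {
            return null;
        }
        String[] tokens = line.trim().split("\\s+");
        if (tokens.length != 4 || !tokens[0].equals("PKT")) {
            return null;
        }
        try {
            int src = Integer.parseInt(field(tokens[1], "src"));
            int dst = Integer.parseInt(field(tokens[2], "dst"));
            String type = field(tokens[3], "type");
            return new SniffedFrame(src, dst, type);
        } catch (IllegalArgumentException e) {
            // NumberFormatException is a subclass, so bad numbers land here too.
            return null;
        }
    }

    /** Extracts the value of "key=value", or throws if the token does not match. */
    private static String field(String token, String key) {
        String prefix = key + "=";
        if (!token.startsWith(prefix) || token.length() == prefix.length()) {
            throw new IllegalArgumentException("expected " + prefix + "<value>");
        }
        return token.substring(prefix.length());
    }
}
```

In a real monitor the lines would come from the serial port, for example wrapped in a `BufferedReader`, and each non-null `SniffedFrame` would be handed to the drawing code. Returning null for unparseable lines is just one possible choice; it keeps stray debug output from the node from breaking the display.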

Finally I sort of finished the monitor and tried to debug the algorithms in the nodes to see if they could work. Unfortunately, after all that effort, I was again not very lucky. The protocols wouldn't work properly and, to make things worse, I had a lot of problems with the libraries that implemented the timers: they contained coding errors that nobody knew about because nobody had really tried to use them before. After several weeks of struggle, at some point I thought that, well, if the algorithm itself was flawed somewhere, why should I suffer implementing it on a limited, black-boxed sensor node instead of first trying it in a higher-level language on a regular computer? And, what is more, why should I do that in a foreign environment like NS2 when what I really needed was to see (and prove) that the algorithms were working as designed, rather than to show their performance? So, obvious as it seems now, I chose to reuse the GUI I had created for the monitor and code a multithreaded program that would create nodes, control their life cycle, show their interactions in real time and collect statistics about what was happening. Finally I wrote the algorithm, found some flaws and made the whole thing work. And, of course, I ultimately proved my point that a double-clustered architecture with dynamic representative election is more power efficient than other types of architecture.
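The general shape of such a threaded simulator can be sketched in a few lines of Java. This is only an illustration under stated assumptions, not the actual program: the class names (`Medium`, `SimNode`, `Simulation`), the single `BEACON` message and the statistics counters are all hypothetical, and no part of the WISSE algorithm is reproduced. Each node runs in its own thread, joins a shared "radio medium" when it is turned on, broadcasts one beacon and then counts what it hears until it is shut down.

```java
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

/** Shared radio medium: delivers a broadcast to every other node that is currently on. */
final class Medium {
    private final List<SimNode> nodes = new CopyOnWriteArrayList<>();
    final AtomicInteger sent = new AtomicInteger();       // broadcasts transmitted
    final AtomicInteger delivered = new AtomicInteger();  // copies received

    void join(SimNode node) { nodes.add(node); }
    void leave(SimNode node) { nodes.remove(node); }

    void broadcast(SimNode from, String payload) {
        sent.incrementAndGet();
        for (SimNode node : nodes) {
            if (node != from) {
                node.inbox.add(from.id + ":" + payload);
                delivered.incrementAndGet();
            }
        }
    }
}

/** A simulated node with a simple life cycle: power on, beacon, listen, power off. */
final class SimNode implements Runnable {
    final int id;
    final BlockingQueue<String> inbox = new LinkedBlockingQueue<>();
    final AtomicInteger heard = new AtomicInteger();
    private final Medium medium;
    private final CountDownLatch beaconSent = new CountDownLatch(1);
    private volatile boolean alive = true;

    SimNode(int id, Medium medium) { this.id = id; this.medium = medium; }

    @Override
    public void run() {
        medium.join(this);
        medium.broadcast(this, "BEACON");
        beaconSent.countDown();
        try {
            // Keep listening until told to stop, then drain what is still queued.
            while (alive || !inbox.isEmpty()) {
                String message = inbox.poll(20, TimeUnit.MILLISECONDS);
                if (message != null) {
                    heard.incrementAndGet(); // a real node would run the protocol here
                }
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            medium.leave(this);
        }
    }

    void awaitBeacon() throws InterruptedException { beaconSent.await(); }
    void shutdown() { alive = false; }
}

final class Simulation {
    /** Turns on n nodes one after another, stops them, and returns messages heard per node. */
    static int[] run(int n, Medium medium) {
        SimNode[] nodes = new SimNode[n];
        Thread[] threads = new Thread[n];
        int[] heard = new int[n];
        try {
            for (int i = 0; i < n; i++) {
                nodes[i] = new SimNode(i, medium);
                threads[i] = new Thread(nodes[i], "node-" + i);
                threads[i].start();
                nodes[i].awaitBeacon(); // fixes the power-on order
            }
            for (SimNode node : nodes) {
                node.shutdown();
            }
            for (int i = 0; i < n; i++) {
                threads[i].join();
                heard[i] = nodes[i].heard.get();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("simulation interrupted", e);
        }
        return heard;
    }
}
```

Calling `Simulation.run(3, new Medium())` turns on three nodes in order. Because a beacon only reaches nodes that are already on, the earliest node hears the most, which is a tiny version of the effect described earlier: what happens in the network depends on when each node is switched on. A GUI could observe the same `Medium` to draw the messages and read its counters for statistics.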

Above, entity 6 sends a broadcast advertising the network. Well, it doesn't look all that sleek, but I like it better than NAM (NOTE: buttons were taken from Linux icon repositories)...